Building a robust, secure, and scalable infrastructure is crucial for any modern application. Amazon Web Services (AWS) offers a powerful suite of tools for this, but configuring and managing those resources by hand can be complex. Terraform is an infrastructure-as-code tool that simplifies creating and maintaining cloud infrastructure by describing it in declarative configuration files.

In this post, we’ll explore how to leverage Terraform to create a secure and scalable AWS infrastructure using three key components: Application Load Balancer (ALB), Auto Scaling, and SSL/TLS encryption. We’ll dive into the benefits of each of these technologies and demonstrate how they work together to create a resilient, high-performance application environment. Whether you’re a DevOps engineer, a cloud architect, or a curious developer, this guide will provide you with practical insights and step-by-step instructions to implement these best practices in your own projects.

Load Balancing

A load balancer is a network infrastructure component that distributes incoming traffic across multiple servers or resources. Its primary purpose is to ensure that no single server is overwhelmed, which improves the performance, availability, and reliability of applications, websites, and services. Important load balancer features are:

- Traffic distribution: They evenly spread incoming requests across multiple servers.

- High availability: If one server fails, the load balancer redirects traffic to healthy servers.

- Scalability: They allow easy addition or removal of servers to handle varying levels of traffic.

- Performance optimization: By distributing load, they prevent any single server from becoming a bottleneck.

- Health checks: Load balancers regularly check the health of servers and route traffic only to healthy ones.

- SSL termination: Many can handle SSL/TLS encryption, offloading this task from backend servers.
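
As a rough illustration of how these features map to Terraform, here is a minimal sketch of an internal ALB and a target group with a health check. It is not taken from the project repository: the resource names, the variable names (`vpc_id`, `private_subnet_ids`), and the health check values are all assumptions chosen for the example.

```hcl
# Hypothetical inputs; the names are assumptions for this sketch.
variable "vpc_id" {
  type = string
}

variable "private_subnet_ids" {
  type = list(string) # an ALB needs subnets in at least two Availability Zones
}

# Internal ALB: reachable only from inside the VPC.
resource "aws_lb" "web" {
  name               = "web-alb"
  internal           = true
  load_balancer_type = "application"
  subnets            = var.private_subnet_ids
}

# Target group: the pool of servers the ALB spreads requests across.
resource "aws_lb_target_group" "web" {
  name     = "web-tg"
  port     = 80
  protocol = "HTTP"
  vpc_id   = var.vpc_id

  # Health checks: only targets that pass them receive traffic.
  health_check {
    path                = "/"
    matcher             = "200"
    interval            = 30
    timeout             = 5
    healthy_threshold   = 3
    unhealthy_threshold = 3
  }
}
```

With a configuration along these lines, a target that fails three consecutive checks stops receiving requests until it passes three checks in a row again.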

Auto Scaling

An Auto Scaling group is a cloud feature that automatically adjusts the number of compute resources (usually virtual machines or containers) in a group based on defined conditions. It’s primarily used to keep applications available and to optimize costs by adding or removing resources as demand changes. Key Auto Scaling group features are:

- Dynamic scaling: Increases or decreases the number of instances based on metrics like CPU utilization, network traffic, or custom metrics.

- High availability: Maintains a specified number of healthy instances across multiple availability zones.

- Cost optimization: Scales down during low-demand periods to reduce costs.

- Self-healing: Automatically replaces unhealthy instances to maintain desired capacity.

- Integration with load balancers: Often used in conjunction with load balancers to distribute traffic across scaled instances.

- Scheduled scaling: Can be configured to scale based on predictable load changes.
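
The dynamic scaling, self-healing, and load balancer integration described above can be sketched in Terraform roughly as follows. This is an illustrative example rather than the project's actual code: the variable names, instance type, group sizes, and the 60% CPU target are assumptions.

```hcl
# Hypothetical inputs; the names are assumptions for this sketch.
variable "private_subnet_ids" { type = list(string) }
variable "ami_id" { type = string }
variable "target_group_arn" { type = string }

# Template describing the instances the group launches.
resource "aws_launch_template" "web" {
  name_prefix   = "web-"
  image_id      = var.ami_id
  instance_type = "t3.micro"
}

resource "aws_autoscaling_group" "web" {
  name_prefix = "web-"
  min_size    = 2
  max_size    = 4
  # desired_capacity is left unset so the scaling policy below controls it.

  # High availability: spread instances across the given subnets.
  vpc_zone_identifier = var.private_subnet_ids

  # Load balancer integration and self-healing: register instances with the
  # target group and replace any instance the load balancer marks unhealthy.
  target_group_arns         = [var.target_group_arn]
  health_check_type         = "ELB"
  health_check_grace_period = 120

  launch_template {
    id      = aws_launch_template.web.id
    version = "$Latest"
  }
}

# Dynamic scaling: add or remove instances to keep average CPU near 60%.
resource "aws_autoscaling_policy" "cpu" {
  name                   = "cpu-target-tracking"
  autoscaling_group_name = aws_autoscaling_group.web.name
  policy_type            = "TargetTrackingScaling"

  target_tracking_configuration {
    predefined_metric_specification {
      predefined_metric_type = "ASGAverageCPUUtilization"
    }
    target_value = 60
  }
}
```

Target tracking is only one of the available policy types. It is used here because it needs a single number to configure.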

SSL/TLS

SSL (Secure Sockets Layer) and its successor TLS (Transport Layer Security) are cryptographic protocols designed to provide secure communication over a computer network. These protocols are widely used to protect sensitive information transmitted over the internet. Key features of SSL/TLS encryption:

- Data encryption: Ensures that data transmitted between the client and server is encrypted and cannot be read by third parties.

- Authentication: Verifies the identity of the server (and sometimes the client) to prevent man-in-the-middle attacks.

- Data integrity: Ensures that the data hasn’t been tampered with during transmission.

- Digital certificates: Uses certificates issued by trusted Certificate Authorities to authenticate the identity of websites.

- HTTPS: Commonly used to secure web traffic, indicated by the padlock icon in web browsers.

In the context of AWS and web applications, SSL/TLS is essential for securing data in transit, whether it’s between users and your application or between different components of your infrastructure. It’s often implemented at the load balancer level, which can handle the SSL/TLS termination, reducing the computational burden on backend servers.
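
To make TLS termination at the load balancer concrete, here is a minimal sketch of an HTTPS listener that decrypts traffic on port 443 and forwards it to a target group. The three input variables are hypothetical names, and the security policy shown is one of the predefined AWS policies picked for illustration, not necessarily the one the project uses.

```hcl
# Hypothetical inputs; the names are assumptions for this sketch.
variable "alb_arn" { type = string }
variable "certificate_arn" { type = string } # certificate stored in ACM
variable "target_group_arn" { type = string }

resource "aws_lb_listener" "https" {
  load_balancer_arn = var.alb_arn
  port              = 443
  protocol          = "HTTPS"

  # Predefined policy limiting clients to TLS 1.2 and TLS 1.3.
  ssl_policy      = "ELBSecurityPolicy-TLS13-1-2-2021-06"
  certificate_arn = var.certificate_arn

  # After decryption, requests go to the backend target group.
  default_action {
    type             = "forward"
    target_group_arn = var.target_group_arn
  }
}
```

Because the listener holds the certificate, the backend servers can serve plain HTTP inside the VPC and do not need their own certificates.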

The Terraform AWS Web Server Project

Now that we’ve covered the key concepts and benefits of ALB, Auto Scaling, and SSL/TLS encryption, let’s dive into the practical implementation. We’ll be using a Terraform project that sets up a secure and scalable AWS infrastructure. This project is available on GitHub at https://github.com/lgdantas/Terraform-AWS-Web-Server.

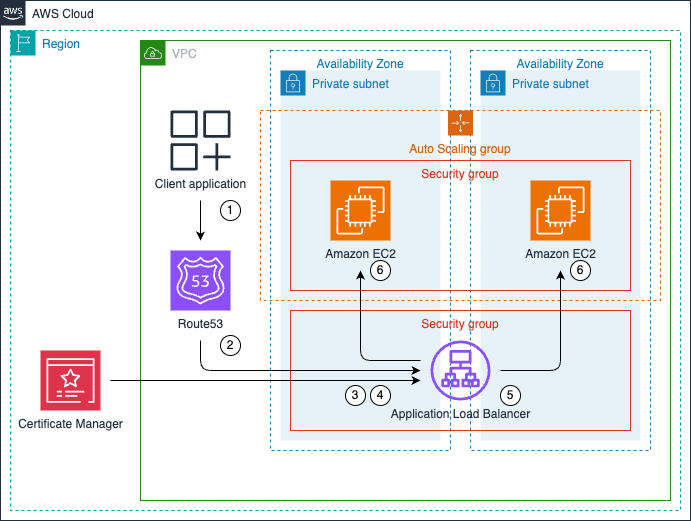

The diagram illustrates the processing flow:

- An application within the VPC initiates a TLS-encrypted request to a private zone address. This request can originate from anywhere within the VPC.

- Amazon Route 53 private zone resolves the requested address to the Application Load Balancer (ALB). This ensures that the request is directed to the correct ALB within the private network.

- The ALB receives the incoming request on port 443, which is the standard port for HTTPS traffic. This indicates that the communication is encrypted using TLS.

- The ALB uses a certificate imported into AWS Certificate Manager for handling the TLS encryption. This certificate allows the ALB to decrypt the incoming request and encrypt the outgoing response.

- After decrypting the request, the ALB forwards it to one of the healthy EC2 instances in its target group. The instance is chosen by the ALB’s configured load balancing algorithm (round robin by default), which spreads traffic across healthy instances.

- On the EC2 instance, Nginx receives the connection from the ALB on port 80 (HTTP). Nginx then processes the request and sends back the appropriate response.
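
The name resolution step in this flow could be expressed in Terraform along these lines. This is a sketch under assumptions, not the repository's code: the variable names are hypothetical, and the `web-server` host name is inferred from the URL used in the testing section.

```hcl
# Hypothetical inputs; the names are assumptions for this sketch.
variable "vpc_id" { type = string }
variable "private_domain" { type = string }
variable "alb_dns_name" { type = string } # DNS name of the ALB
variable "alb_zone_id" { type = string }  # hosted zone ID of the ALB

# Private hosted zone: resolvable only from inside the associated VPC.
resource "aws_route53_zone" "private" {
  name = var.private_domain

  vpc {
    vpc_id = var.vpc_id
  }
}

# Alias record pointing the application host name at the ALB.
resource "aws_route53_record" "web" {
  zone_id = aws_route53_zone.private.zone_id
  name    = "web-server.${var.private_domain}"
  type    = "A"

  alias {
    name                   = var.alb_dns_name
    zone_id                = var.alb_zone_id
    evaluate_target_health = true
  }
}

# URL that clients inside the VPC would call.
output "app_url" {
  value = "https://web-server.${var.private_domain}"
}
```

An alias record is used instead of a CNAME so that the name follows the ALB even when its underlying IP addresses change.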

Provisioning infrastructure

Prerequisites

- Terraform installed on your local machine.

- An AWS account with a VPC and private subnets configured.

- Optional: An AWS key pair.

- Command-line access to the AWS account.

- Git client installed on your local machine.

Download

From a terminal on your local machine, download the source:

git clone https://github.com/lgdantas/Terraform-AWS-Web-Server.git

TLS Certificate

⚠️ Important Notice – The following procedure uses self-signed TLS certificates. These steps are intended for proof-of-concept, testing, and development purposes only. For production or long-term deployments, choose AWS Private CA.

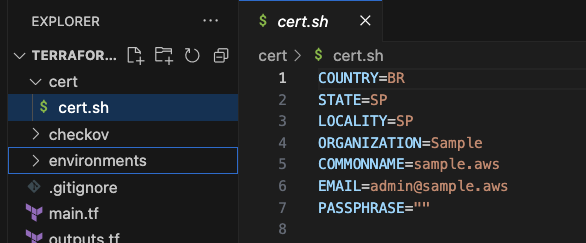

To create the self-signed TLS certificate, open the cert/cert.sh file and set the following variables to your own values, including the name you want to give your private domain:

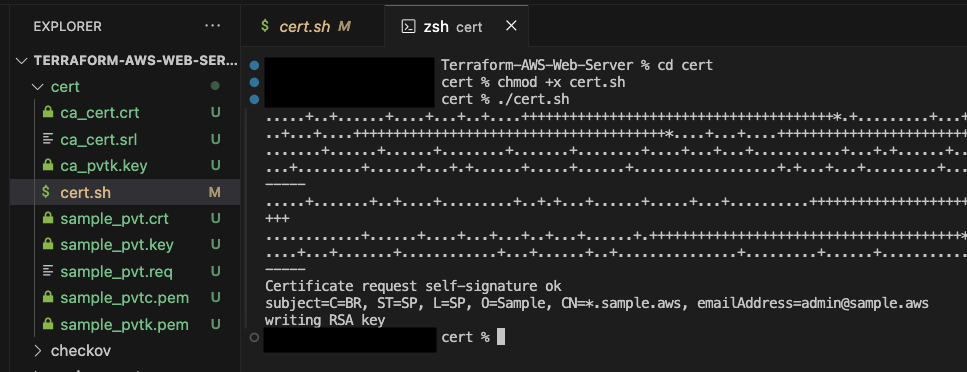

Open a terminal, change directory to cert, add execution permission to cert.sh, and run cert.sh to create the certificate and private key.
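
Once the certificate and private key exist, they need to reach AWS Certificate Manager so the ALB can use them. A minimal sketch of such an import is shown below. Only `cert/ca_cert.crt` is a file name mentioned in this post. The `server.key` and `server.crt` names are hypothetical placeholders for whatever cert.sh actually writes.

```hcl
# Import a locally generated certificate into AWS Certificate Manager.
resource "aws_acm_certificate" "web" {
  # Hypothetical file names; replace with the files cert.sh produces.
  private_key      = file("${path.module}/cert/server.key")
  certificate_body = file("${path.module}/cert/server.crt")

  # CA certificate that signed the server certificate.
  certificate_chain = file("${path.module}/cert/ca_cert.crt")

  # Note: the private key is stored in the Terraform state,
  # so the state file must be protected accordingly.
}
```

The resulting `aws_acm_certificate.web.arn` is the value an HTTPS listener would reference.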

Provisioning

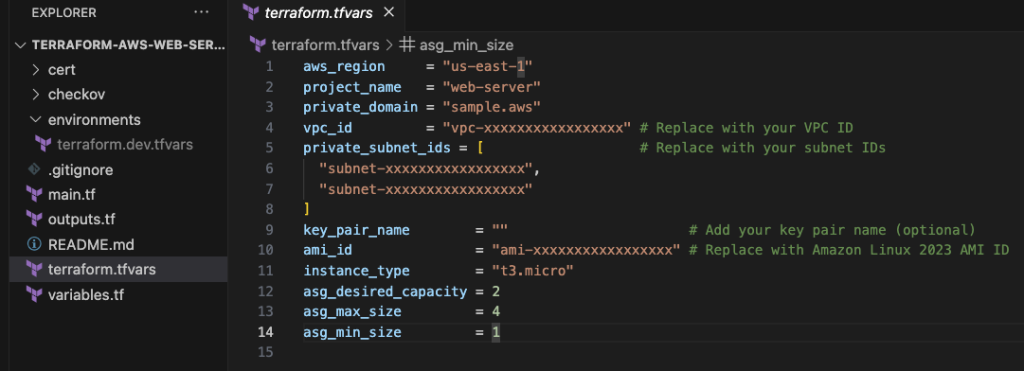

Using your favorite IDE, edit the terraform.tfvars file with your AWS account parameters. Note that private_domain must match the certificate’s COMMONNAME value:

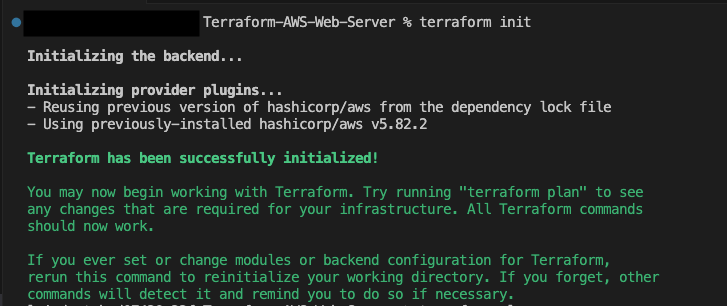
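
For readers who cannot see the screenshot, a terraform.tfvars file generally has the shape sketched below. Only `private_domain` is a variable name confirmed by this post. Every other name and value is a hypothetical placeholder, so check the variable declarations in the repository for the real ones.

```hcl
# terraform.tfvars (illustrative only)

# Must equal the COMMONNAME value used when creating the certificate.
private_domain = "example.internal"

# Hypothetical variable names and placeholder IDs below.
vpc_id             = "vpc-0123456789abcdef0"
private_subnet_ids = ["subnet-0123456789abcdef0", "subnet-0fedcba9876543210"]
key_name           = "my-key-pair" # optional, see the prerequisites
```

Terraform loads a file named terraform.tfvars automatically, so no extra command-line flag is needed when running plan or apply.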

Use terraform init to initialize the Terraform project.

Use terraform plan to preview changes.

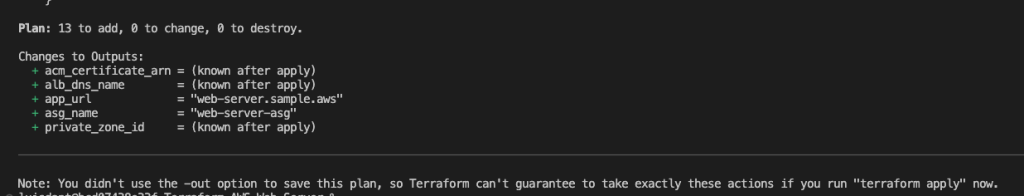

Use terraform apply -auto-approve to provision the infrastructure.

Note: The app_url will be used for testing.

Testing

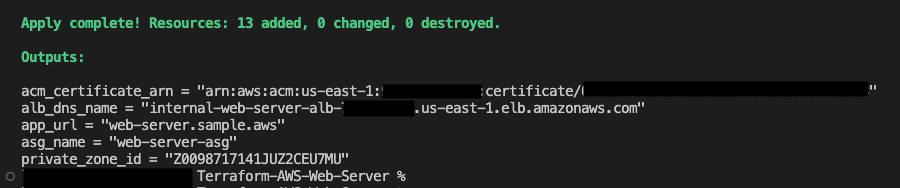

Connect to any instance running in the same VPC (including the instances created by this project) from your local machine or the AWS EC2 Console.

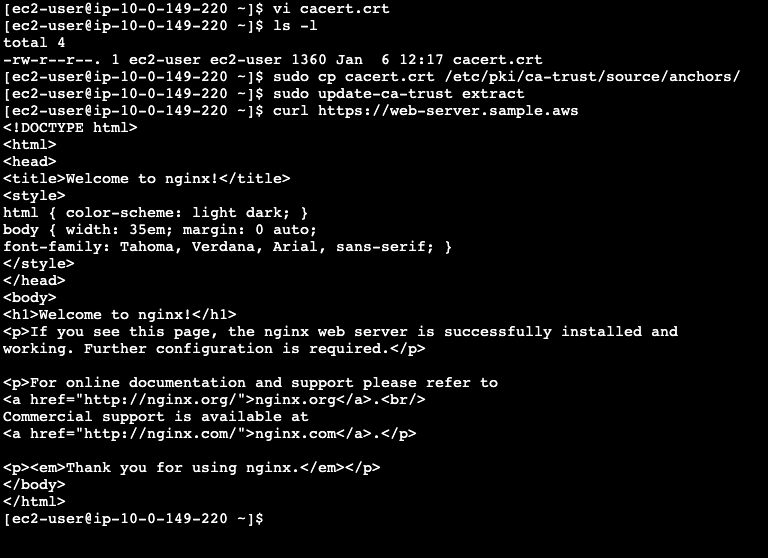

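Try to curl your web server without adding the CA Root to the trusted certificates. At this point curl should fail with a certificate verification error, since the CA that signed the certificate is not yet trusted.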

curl https://web-server.<your domain>

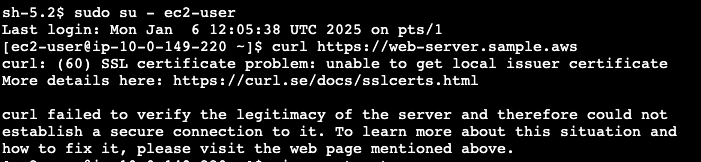

Create a file named cacert.crt and paste the content of cert/ca_cert.crt from your local project.

Add the CA Root certificate to the trusted certificates:

sudo cp cacert.crt /etc/pki/ca-trust/source/anchors/

sudo update-ca-trust extract

Curl your domain again:

curl https://web-server.<your domain>

Cleaning

To decommission the infrastructure created by this project, run:

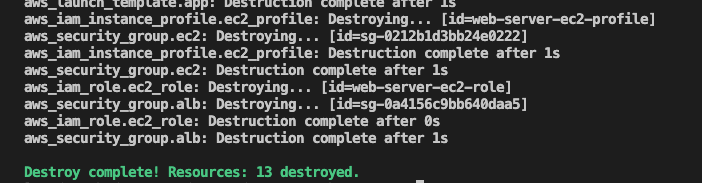

terraform destroy -auto-approve

Good job!